AI benchmark for personal computers

MLPerf Client

MLPerf Client is a benchmark developed collaboratively at MLCommons to evaluate the performance of large language models (LLMs) and other AI workloads on personal computers, from laptops and desktops to workstations. By simulating real-world AI tasks, it provides clear metrics for understanding how well systems handle generative AI workloads. The MLPerf Client working group intends for this benchmark to drive innovation and foster competition, ensuring that PCs can meet the challenges of the AI-powered future.

The large language model tests

The LLM tests in MLPerf Client v1.0 make use of several different language models. Large language models are one of the most exciting forms of generative AI available today. LLMs are capable of performing a wide range of tasks based on natural-language interactions. The working group chose to focus its efforts on LLMs because there are many promising use cases for running LLMs locally on client systems, from chat interactions to AI agents, personal information management, and more.

The benchmark includes multiple tasks that vary the lengths of the input prompts and output responses to simulate different types of language model use.

| Tasks | Model | Datasets | Mode | Quality |
|---|---|---|---|---|
| Code analysis; Content generation; Creative writing; Summarization, light; Summarization, moderate; Summarization, intermediate*; Summarization, substantial* | Llama 2 7B Chat; Llama 3.1 8B Instruct; Phi 3.5 Mini Instruct; Phi 4 Reasoning 14B* | OpenOrca, GovReport, MLPerf Client source code | Single stream | MMLU score |

The tasks and models marked with an asterisk (*) are experimental and are not part of the base benchmark. They are included as optional extras that can stress a system in different ways, and they may not be fully optimized on all target systems. Users may run these experimental components to see how systems handle these workloads. Please note that these LLM workloads tend to require a relatively large amount of available memory, so the tests, and the experimental ones in particular, may not run successfully on all client-class systems.

For more information on how the benchmark works, please see the Q&A section below.

System requirements and acceleration support

Supported hardware

- AMD Radeon RX 7000 or 9000 series GPUs with 8GB or more VRAM (16GB for experimental prompts)

- AMD Ryzen AI 9 series processors:

  - 16GB or more system memory for GPU-only configs (32GB for experimental prompts)

  - 64GB or more system memory recommended for hybrid configs. (See known issues for 32GB systems.)

- Intel Arc B series GPUs with 10GB or more VRAM

- Intel Core Ultra series 2 processors (Lunar Lake, Arrow Lake) with 16GB or more system memory (32GB for experimental workloads)

- NVIDIA GeForce RTX 2000 series or newer GPUs with 8GB or more VRAM (16GB for experimental prompts)

- Qualcomm Snapdragon X Elite platforms with 32GB or more system memory

Other requirements

- Windows 11 (x86-64 or Arm) or macOS 15.5, with the latest updates recommended

- On Windows systems, the Microsoft Visual C++ Redistributable must be installed

- 200GB free space required on the drive from which the benchmark will run

- 400GB free space recommended for running all models

- We recommend installing the latest drivers for your system:

  - For AMD NPUs, please use NPU driver version 32.0.203.280 or newer

  - For AMD GPUs, please use GPU driver version 32.0.21013.1000 or newer from here

  - For Intel NPUs, please use NPU driver version 32.0.100.4181 or newer from here

  - For Intel GPUs, please use GPU driver version 32.0.101.6972 or newer from here

  - For NVIDIA GPUs, please use the latest driver version from here

  - For Qualcomm NPUs, please use NPU driver version 30.0.140.1000 or newer
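The free-space requirement above can be checked before a run with a few lines of Python. This is only a sketch: the helper name `classify_free_space` and the use of decimal gigabytes (10^9 bytes) are assumptions, not part of MLPerf Client.

```python
import shutil

# Assumption: "GB" is treated as decimal gigabytes (10**9 bytes).
GB = 10**9
REQUIRED_FREE_GB = 200      # required on the drive the benchmark runs from
RECOMMENDED_FREE_GB = 400   # recommended for running all models


def classify_free_space(free_bytes: int) -> str:
    """Compare a free-space figure against the documented thresholds."""
    free_gb = free_bytes / GB
    if free_gb >= RECOMMENDED_FREE_GB:
        return "recommended"
    if free_gb >= REQUIRED_FREE_GB:
        return "minimum"
    return "insufficient"


if __name__ == "__main__":
    # Check the drive that holds the current working directory.
    usage = shutil.disk_usage(".")
    print(f"{usage.free / GB:.0f} GB free: {classify_free_space(usage.free)}")
```

Run it from the folder where the benchmark was unzipped so that the check applies to the right drive.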

What’s new in MLPerf Client v1.0

MLPerf Client v1.0 has a host of new features and capabilities, including:

- An expanded selection of supported large language models, now including:

  - Llama 2 7B Chat

  - Llama 3.1 8B Instruct

  - Phi 3.5 Mini Instruct

  - Phi 4 Reasoning 14B (as an experimental model)

- New prompt categories:

  - Code analysis prompts

  - Longer 4K and 8K text summarization prompts (as an experimental addition)

- Updated hardware support, which now includes:

  - AMD NPU and GPU hybrid support via ONNX Runtime GenAI and the Ryzen AI SDK

  - AMD, Intel, and NVIDIA GPU support via ONNX Runtime GenAI-DirectML

  - Intel NPU and GPU support via OpenVINO

  - Qualcomm Technologies NPU and CPU hybrid support via Qualcomm Genie and the QAIRT SDK

  - Apple Mac GPU support via MLX

- Several new experimental hardware acceleration options:

  - Intel NPU and GPU support via Microsoft Windows ML and the OpenVINO execution provider

  - NVIDIA GPU support via Llama.cpp-CUDA

  - Apple Mac GPU support via Llama.cpp-Metal

- Updated operating system support:

  - Microsoft Windows x64

  - Microsoft Windows on Arm

  - Apple macOS

- Other features:

  - A CLI version of the benchmark for easy scripting and automation

  - A beta GUI version of the benchmark for all supported platforms with the following features:

    - Easy selection and running of multiple models and configs in sequence

    - Real-time readouts of compute and memory utilization as the benchmark tests run

    - Results history with comparison tables across selected result sets

    - Results exports in CSV format

Known issues in v1.0

- When running the benchmark on a Windows 11 system with multiple Intel GPUs (for instance, an integrated GPU and a discrete GPU) via the experimental Windows ML-OpenVINO path, the benchmark may run twice on one device and fail to report the correct GPU used. The suggested workaround is to disable one of the GPUs temporarily in the Windows Device Manager or from the system UEFI in order to ensure that the test runs on the other GPU.

- Some Windows 11 systems with AMD Ryzen AI 9 series processors and 32GB of memory may not be able to run the NPU-GPU hybrid configs successfully. The following workaround should allow the hybrid configs to run successfully on the CLI version of the benchmark. Please note: only advanced users should attempt this workaround.

  - Open the Registry Editor

  - Navigate to HKEY_LOCAL_MACHINE\System\CurrentControlSet\Control\GraphicsDrivers

  - Right click on “GraphicsDrivers” in the left pane and select New > Key

  - Enter “MemoryManager” for the key name

  - Right click on the right pane under “MemoryManager” and select New > DWORD (32-bit) Value

  - Enter “SystemPartitionCommitLimitPercentage” for the value name

  - Double click “SystemPartitionCommitLimitPercentage” in the right pane

  - Enter decimal “60” for the value

  - Close the Registry Editor

  - Reboot and run the benchmark
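For reference, the registry change described in the steps above can also be written down as the text of a `.reg` file. The sketch below only generates that text; it does not touch the registry. The function name `build_reg_file` is hypothetical, and this scripted route is an illustration, not a method documented by the MLPerf Client working group. The same caution applies: only advanced users should attempt it.

```python
# Key and value names are taken from the manual steps above.
KEY_PATH = (
    r"HKEY_LOCAL_MACHINE\System\CurrentControlSet\Control"
    r"\GraphicsDrivers\MemoryManager"
)
VALUE_NAME = "SystemPartitionCommitLimitPercentage"


def build_reg_file(percentage: int = 60) -> str:
    """Return .reg file text that sets the DWORD value to `percentage`."""
    if not 0 <= percentage <= 100:
        raise ValueError("percentage must be between 0 and 100")
    lines = [
        "Windows Registry Editor Version 5.00",
        "",
        f"[{KEY_PATH}]",
        # .reg files store DWORDs as 8 hex digits; decimal 60 is 0x3c.
        f'"{VALUE_NAME}"=dword:{percentage:08x}',
        "",
    ]
    return "\n".join(lines)


if __name__ == "__main__":
    print(build_reg_file())
```

Note that the Registry Editor dialog accepts the value as decimal 60, while the file format expresses the same number in hexadecimal.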

Downloading the benchmark

The latest release of MLPerf Client can be downloaded from the GitHub release page. The end-user license agreement is included in the download archive file.

The public GitHub repository also contains the source code for MLPerf Client for those who might wish to inspect or modify it.

More information about how to run the benchmark is provided in the Q&A section below.

Common questions and answers

How does one run the benchmark?

On Windows 11, the benchmark requires the installation of the Microsoft Visual C++ Redistributable, which is available for download here. Please install this package before attempting to run MLPerf Client.

The MLPerf Client benchmark is distributed as a Zip file. Once you’ve downloaded the Zip archive, extract it to a folder on your local drive. Inside the folder you should see a few files, including the MLPerf Client executable and several directories containing JSON config files for specific brands of hardware.

To run MLPerf Client, open a command line instance in the directory where you’ve unzipped the files. In Windows 11 Explorer, if you are viewing the unzipped files, you can right-click in the Explorer window and choose “Open in Terminal” to start a PowerShell session in the appropriate folder.

The JSON config files specify the acceleration paths and configuration options recommended by each of the participating hardware vendors.

To run the benchmark, call the benchmark executable with the -c flag, specifying which config file to use. If you don’t use the -c flag to specify a config file, the benchmark will not run.

The command takes this form:

mlperf-windows.exe -c </path/to/config-filename>.json

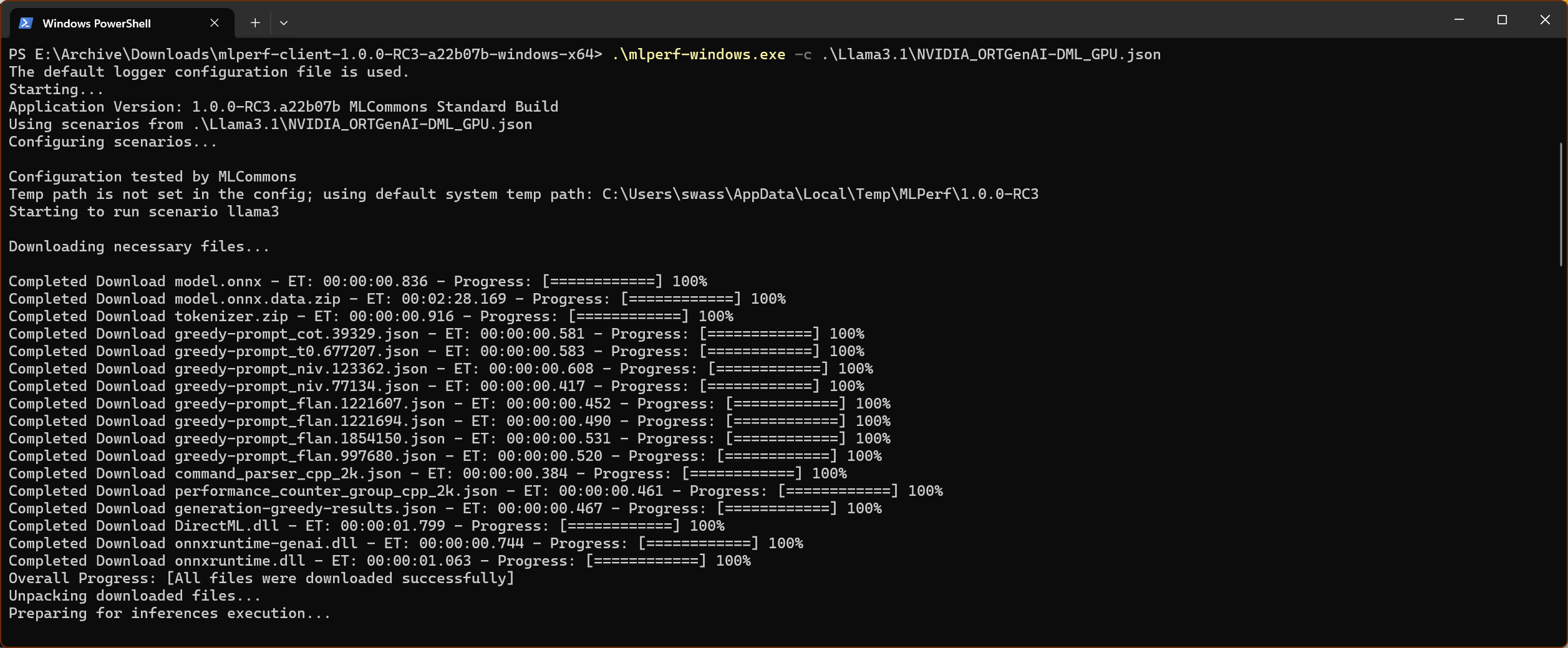

So, for instance, you could run Llama 3.1 8B Instruct via the NVIDIA ONNX Runtime DirectML path by typing:

mlperf-windows.exe -c \Llama3.1\NVIDIA_ORTGenAI-DML_GPU.json
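For scripted runs, the same invocation can be assembled in Python. This is a minimal sketch: the helper names `build_command` and `run_benchmark` are hypothetical, and only the executable name and the -c flag come from the instructions above.

```python
import subprocess


def build_command(executable: str, config_path: str) -> list[str]:
    """Build the argument list: <executable> -c <config file>."""
    if not config_path.lower().endswith(".json"):
        raise ValueError("the config file is expected to be a .json file")
    return [executable, "-c", config_path]


def run_benchmark(executable: str, config_path: str) -> int:
    """Run the benchmark and return its exit code (not called here)."""
    completed = subprocess.run(build_command(executable, config_path), check=False)
    return completed.returncode


if __name__ == "__main__":
    # Print the command instead of running it, so the sketch is safe anywhere.
    cmd = build_command(
        "mlperf-windows.exe", r"\Llama3.1\NVIDIA_ORTGenAI-DML_GPU.json"
    )
    print(" ".join(cmd))
```

Passing the arguments as a list avoids shell quoting problems when the config path contains spaces.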

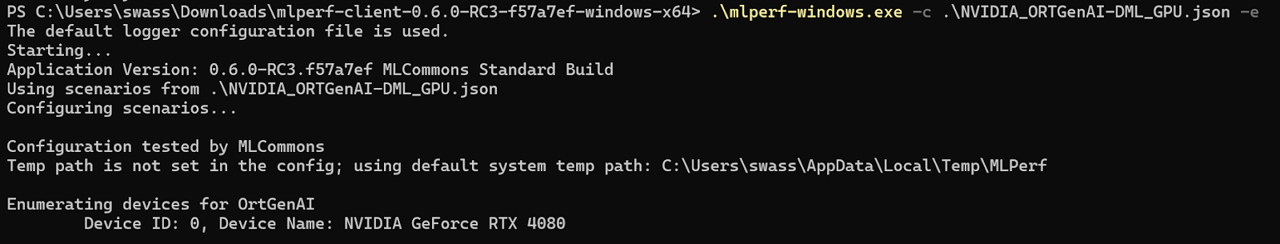

Here’s how that interaction should look:

Once you’ve kicked off the test run, the benchmark should begin downloading the files it needs to run. Those files may take a while to download. For instance, for the ONNX Runtime GenAI path, the downloaded files will total just over 7GB.

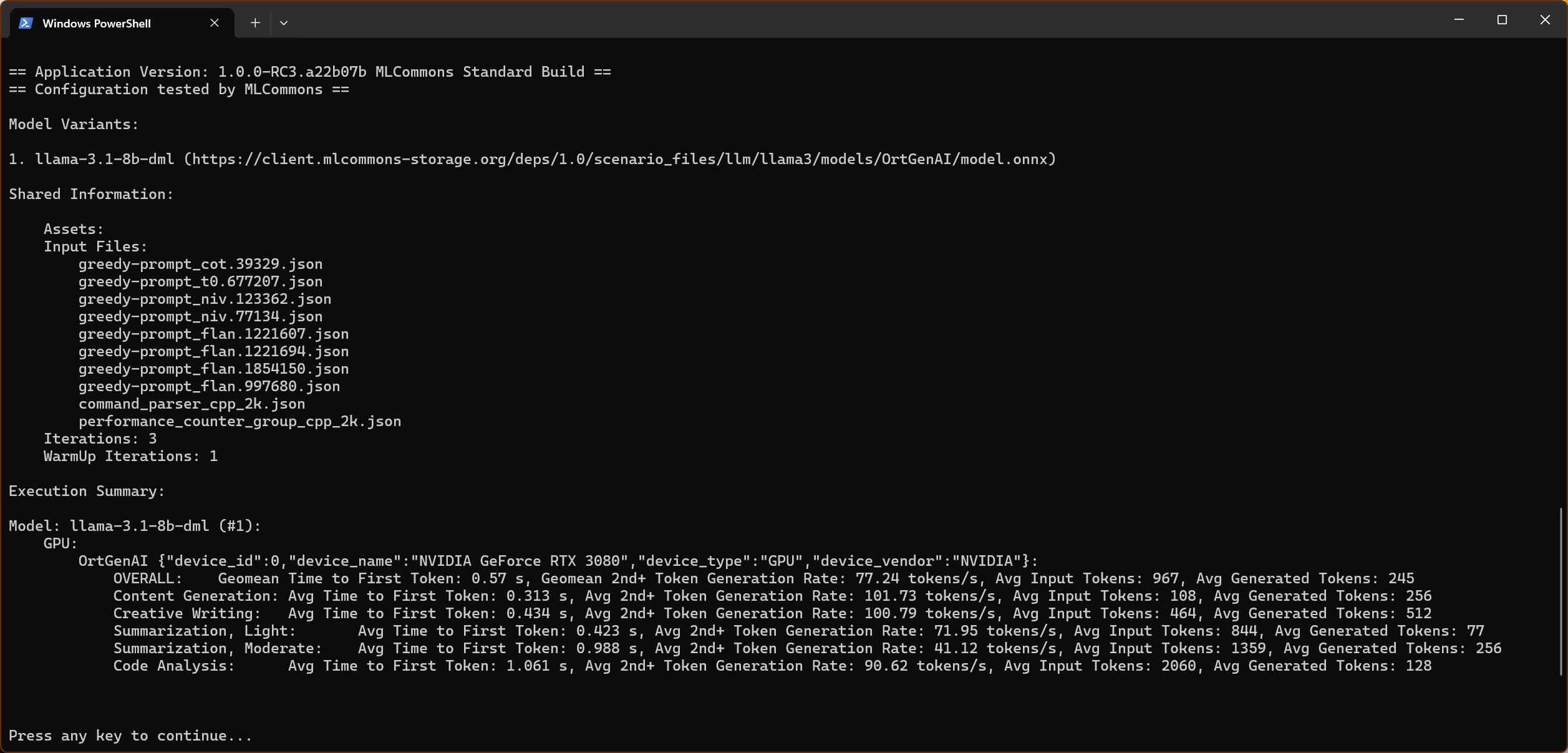

After the downloads are complete, the test begins automatically. While the test runs, a progress bar indicates the current stage of the benchmark. When the run completes, the results are displayed as below.

What are these “configuration tested” notifications?

The MLPerf Client benchmark was conceived as more than just a static test. It offers extensive control and configurability over how it runs, and the MLPerf Client working group encourages its use for experimentation and testing beyond the included configurations.

However, scores generated with modified executables, libraries, or configuration files are not to be considered comparable to the fully tested and approved configurations shipped with the benchmark application.

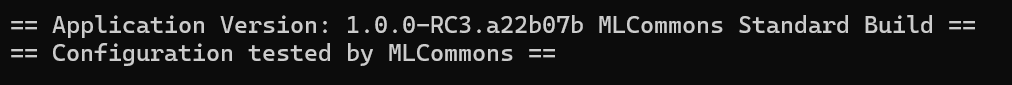

When the benchmark begins to run with one of the standard config files, you should see a notification in the output that looks like this:

This “Configuration tested by MLCommons” note means that both the executable program and the JSON config file you are using have been tested and approved by the MLPerf Client working group ahead of the release of the benchmark application. This testing process involves peer review among the organizations participating in the development of the MLPerf Client benchmark. It also includes MMLU-based accuracy testing. Only scores generated with this “configuration tested” notice shall be considered valid MLPerf Client scores.

Why are some portions of the benchmark marked as “experimental?”

This release of MLPerf Client includes several components that are considered experimental additions. They ship with the benchmark, but for one reason or another, they are not yet ready to be incorporated into the base set of tests and configs. Users may test and produce results from these components, but they do not yet rise to the standard of official “tested by MLCommons” configurations that are fully optimized and vetted by the MLPerf Client working group.

Any results from experimental components will be clearly marked as such when the benchmark outputs them. We would ask end users to note the experimental nature of any of these results they share or publish.

Including experimental elements in MLPerf Client allows us to incorporate new and potentially interesting developments in AI sooner than we could otherwise.

The following elements of MLPerf Client v1.0 are considered experimental:

- Longer 4K and 8K prompts – Some new configs are included that request the summarization of longer passages of text, with maximum prompt sizes of about 4K and 8K tokens. These configs may surpass the limits of many client systems, but those with capable enough systems can use these prompts to see how their computers handle larger context windows.

- The Phi 4 Reasoning 14B-parameter model – Phi 4 Reasoning 14B is a relatively new model with reasoning capabilities, and it’s almost double the size by parameter count of the other language models in this version of MLPerf Client. This model may not yet be well optimized for many client systems. Also, like the long-context prompts, this model may not run on some systems due to its steep memory and computational requirements.

- The Windows ML execution path – Windows Machine Learning (ML) helps application developers run ONNX models locally across the entire variety of Windows PC hardware. The Windows ML APIs are currently considered experimental by Microsoft and are not supported for use in production environments. We have an early preview of Windows ML in the form of an experimental execution path and configs for Intel systems via the OpenVINO execution provider. Please note that performance may change between this experimental version and the final release of the new Windows ML APIs.

- The Llama.cpp path – The Llama.cpp path runs on Mac GPUs via Metal and on NVIDIA GPUs in Windows via CUDA.

Because experimental components are not part of the base benchmark, they are limited in some ways in how they contribute to reported scores. For instance, 4K and 8K prompts are reported separately and do not contribute to the overall score calculated across prompt categories for each model.

Which elements of the benchmark comprise the official, non-experimental set of tests?

Here is an overview of the prompts and models in this release of MLPerf Client.

Models for v1.0 include:

| Model | Type |
|---|---|
| Llama 2 7B Chat | Base |
| Llama 3.1 8B Instruct | Base |
| Phi 3.5 Mini Instruct | Base |
| Phi 4 Reasoning 14B | Experimental |

Prompt categories for v1.0:

| Prompt category | Type | Contributes to overall score? |
|---|---|---|
| Code analysis | Base | Yes |
| Content generation | Base | Yes |
| Creative writing | Base | Yes |
| Summarization, light | Base | Yes |
| Summarization, moderate | Base | Yes |
| Summarization, intermediate | Experimental | No |
| Summarization, substantial | Experimental | No |

What do the performance metrics mean?

The LLM tests produce results in terms of two key metrics. Both metrics characterize important aspects of the user experience.

Time to first token (TTFT): This is the wait time in seconds before the system produces the first token in response to each prompt. In LLM interactions, the wait for the first output token is typically the longest single wait in the response. Following widespread industry practice, we have chosen to report this result separately. For TTFT, lower numbers, and thus shorter wait times, are better.

Tokens per second (TPS): This is the average rate to produce all of the rest of the tokens in the response, excluding the first one. For TPS, higher output rates are better.

In chat-style LLM usage, TTFT is the time you wait until the first character of the response appears, and TPS is the rate at which the rest of the response appears. The latency for a complete response is therefore approximately TTFT + (number of tokens in the response − 1) / TPS.
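As a worked example of how the two metrics combine, the sketch below estimates the latency of a full response. Since TPS is a rate that excludes the first token, the remaining tokens are divided by it. The function name and the sample figures are illustrative assumptions, not benchmark results.

```python
def response_latency_seconds(ttft_s: float, tps: float, num_tokens: int) -> float:
    """Estimate total response time from TTFT and TPS.

    TTFT covers the first token; the remaining (num_tokens - 1) tokens
    arrive at `tps` tokens per second.
    """
    if num_tokens < 1:
        raise ValueError("a response has at least one token")
    if tps <= 0:
        raise ValueError("tokens per second must be positive")
    return ttft_s + (num_tokens - 1) / tps


if __name__ == "__main__":
    # Hypothetical system: 0.5 s to first token, 20 tokens/s afterwards,
    # producing a 256-token response.
    print(f"{response_latency_seconds(0.5, 20.0, 256):.2f} s")
```

With these made-up figures, nearly all of the wait comes from the token generation rate rather than from TTFT, which is typical for long outputs after short prompts.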

What are the different task categories in the benchmark?

Here’s a look at the token lengths for the various categories of work included in the benchmark:

| Category | Approximate input tokens | Approximate expected output tokens |
|---|---|---|
| Content generation | 128 | 256 |
| Creative writing | 512 | 512 |
| Summarization, light | 1024 | 128 |
| Summarization, moderate | 1566 | 256 |
| Code analysis | 2059 | 128 |
| Summarization, intermediate* (~4K) | 3906 | 128 |
| Summarization, substantial* (~8K) | 7631 | 128 |

* Experimental prompts

You may find that hardware and software solutions vary in their performance across the categories, with larger context lengths being more computationally expensive.

All of the input prompts used in the base categories come from the OpenOrca data set with the exception of the code-analysis prompt set, which we created from our own open-source code repository. In similar fashion, the experimental categories contain prompts from our own code repository and from the Government Report data set.

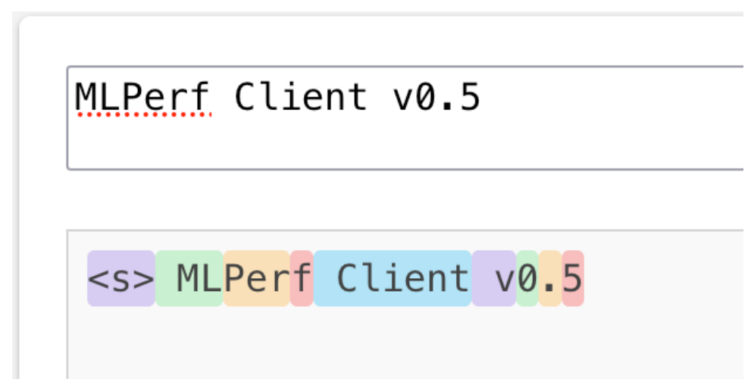

What is a token?

The fundamental unit of length for inputs and outputs in an LLM is a token. A token is a component part of language that the machine-learning model uses to understand syntax. Tokens can be words, parts of words, or punctuation marks. For instance, complex words like “everyone” could be made up of two tokens, one for “every” and another for “one.”

An example of tokenization. Each colored region represents a token.

As a rule of thumb, 100 tokens would typically translate into about 75 English words. This tokenizer tool will give you a sense of how words and phrases are broken down into tokens.
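To make the rule of thumb concrete, the short sketch below converts token counts to approximate English word counts using the 100-to-75 ratio. The function name is hypothetical, and the real ratio varies by tokenizer and by text.

```python
# Rule of thumb from the text: about 75 English words per 100 tokens.
WORDS_PER_TOKEN = 0.75


def estimate_words(tokens: int) -> int:
    """Roughly convert a token count to an English word count."""
    if tokens < 0:
        raise ValueError("token count cannot be negative")
    return round(tokens * WORDS_PER_TOKEN)


if __name__ == "__main__":
    # Expected output lengths used by the benchmark's task categories.
    for tokens in (128, 256, 512):
        print(f"{tokens} tokens is roughly {estimate_words(tokens)} words")
```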

How is the benchmark optimized for client systems, and how do you ensure quality results?

Many generative AI models are too large to run in their native form on client systems, so developers often modify them to make them fit in the memory and computational footprints required. This footprint reduction is typically achieved through model quantization, which changes the datatype used for model weights from the original 16- or 32-bit floating-point format (fp16 or fp32) to something more compact. For LLMs in client systems, the 4-bit integer format (int4) is a popular choice. Thus, in MLPerf Client v1.0, the weights in the language models have been quantized to the int4 format. This change allows the models to fit and function well on a broad range of client devices, from laptops to desktops and workstations.

That said, reducing the precision of the weights can also come with a drawback: a reduction in the quality of the AI model’s outputs. Other model optimizations can also impact quality. To ensure that a model’s functionality hasn’t been too compromised by optimization, the MLPerf Client working group chose to impose an accuracy test requirement based on the MMLU dataset. Each accelerated execution path and model included in the benchmark must achieve a passing score on an accuracy test composed of the prompts in the MMLU corpus.

For MLPerf Client v1.0, the accuracy thresholds are as follows:

| Model | Threshold |
|---|---|
| Llama 2 7B Chat | 43 |
| Llama 3.1 8B Instruct | 62 |
| Phi 3.5 Mini Instruct | 59 |
| Phi 4 Reasoning 14B | 70 |

The accuracy test is not part of the standard MLPerf Client performance benchmark run because accuracy testing can take quite some time. Instead, the accuracy test is run offline by working group participants in order for an acceleration path to qualify for inclusion in the benchmark release. All of the supported frameworks and models have been verified to achieve the required accuracy levels.
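The threshold table can be expressed as a simple lookup. The sketch below assumes that a score passes when it is greater than or equal to the threshold and that scores are on the same scale as the table; neither detail is spelled out above, and the function name is hypothetical.

```python
# MMLU accuracy thresholds for MLPerf Client v1.0, from the table above.
MMLU_THRESHOLDS = {
    "Llama 2 7B Chat": 43,
    "Llama 3.1 8B Instruct": 62,
    "Phi 3.5 Mini Instruct": 59,
    "Phi 4 Reasoning 14B": 70,
}


def meets_threshold(model: str, mmlu_score: float) -> bool:
    """Return True if the score reaches the model's threshold.

    Assumption: "passing" means score >= threshold.
    """
    if model not in MMLU_THRESHOLDS:
        raise KeyError(f"no threshold listed for {model!r}")
    return mmlu_score >= MMLU_THRESHOLDS[model]


if __name__ == "__main__":
    # Made-up score, for illustration only.
    print(meets_threshold("Llama 3.1 8B Instruct", 63.1))
```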

Does the benchmark require an Internet connection to run?

Only for the first run with a given acceleration path. After that, the downloaded files will be stored locally, so they won’t have to be downloaded again on subsequent runs.

Do I need to run the test multiple times to ensure accurate performance results?

Although it’s not visible from the CLI, the benchmark actually runs each test four times internally in the default configuration files. There’s one warm-up run and three performance runs. The application then reports the averages of the results of the three performance runs.
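The warm-up-then-average scheme described above can be sketched in a few lines. This illustrates the idea only and is not the benchmark's actual code; the function name and sample numbers are made up.

```python
from statistics import mean


def summarize_runs(run_results: list[float], warmup_runs: int = 1) -> float:
    """Discard the warm-up run(s) and average the remaining results."""
    measured = run_results[warmup_runs:]
    if not measured:
        raise ValueError("no performance runs left after discarding warm-up")
    return mean(measured)


if __name__ == "__main__":
    # Hypothetical tokens-per-second results: one warm-up, three performance runs.
    print(summarize_runs([9.0, 20.0, 22.0, 24.0]))
```

Discarding the first run keeps one-time costs, such as loading the model, from skewing the reported average.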

My system has multiple GPUs. On which GPU will the test run? Can I specify which GPU it should use?

By default, the GPU-focused configs in MLPerf Client do not specify a particular device on which to run, so the benchmark will run on all available GPUs, one at a time, and report results for each.

To see a list of the GPUs available to run a particular config, use the -e switch on the command line while specifying the config as usual with -c <config name>.

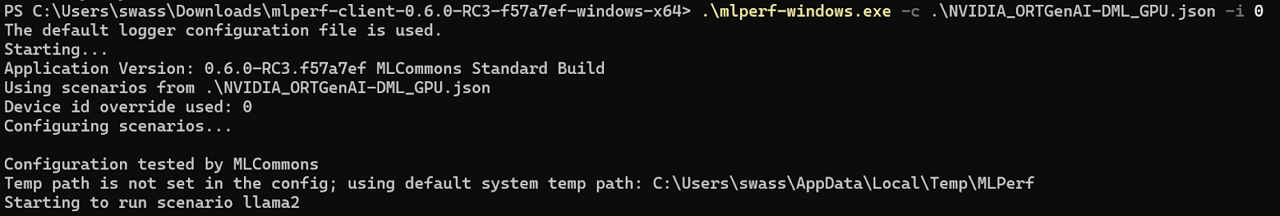

Once you have a list of devices, you can use the -i switch to specify on which device the benchmark should run, like so:

The benchmark should then run only on the specified device.

My system has an integrated GPU from one company and a discrete GPU from another. How should I run the benchmark?

The preferred path for each vendor is to use its own config file. So, for example, we recommend using the NVIDIA ORT GenAI config file to run on the discrete GeForce GPU while using the AMD ORT GenAI GPU config file to run on the integrated AMD GPU.

See above for instructions on how to specify which GPU the benchmark should use with each config.

The benchmark doesn’t perform as well as expected on my system’s discrete GPU. Any ideas why?

See the questions above about the benchmark running on multiple devices. Make sure the benchmark results you are seeing come from your system’s discrete GPU, not from an integrated graphics processor. If needed, specify the device as described above to ensure you’re testing the right GPU.

How can I contribute to the ongoing development of MLPerf Client?

For details on becoming an MLCommons member and joining the MLPerf Client working group, please contact [email protected].